Build Your Own Agents: Part 1

Build Your Own Agents!

Everyone who reads this should be building their own agents. At this point in time, "vibe-coding" (see note) your own personal software has an extremely high benefit-to-cost ratio.

The key word is personal. Building real products is hard, mostly because people are complicated, PMF is difficult to find, software is only a small part of a business, etc. Building for yourself guarantees PMF, and you can focus on the plethora of easy wins that are just a few prompts away.

Auto Agent

Auto Agent is my latest attempt to build my personal J.A.R.V.I.S. It began as my first ever completely vibe-coded project, auto-coder, bootstrapped in the Anthropic Console. It then evolved into auto-code2, the coding assistant which in some form has been my daily driver for a year.

That project got lost in a very long-running branch optimistically called "refactor". A big part of the problem was trying to make it useful for other people. I had not yet learned that the future is hyper-personalised.

Auto-Agent is what I'm calling the latest iteration. I don't intend to share the code directly, but hopefully I can share the ideas. This intro just sets the scene, but I've been experimenting a lot with creating LLM-friendly guides so that future articles can be much more practical.

System Components

The goal is a loosely coupled system that can grow over time. I'll interact with it through various apps and integrations with the tools I already use (Chrome, Neovim, and Obsidian in particular).

Agents and Tools

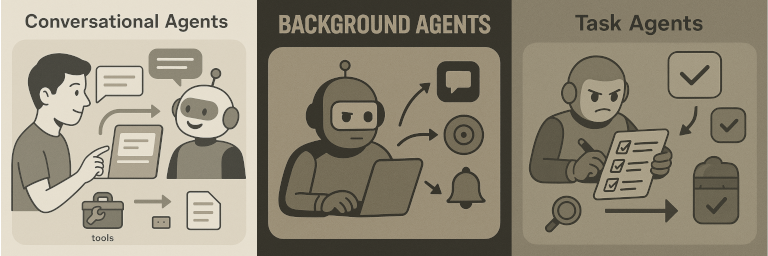

I'm using the term "Agent" quite loosely. The important thing is what kind of interface each one provides. From that perspective, the three main types of agents are:

- Conversational Agents - These are standard chat assistants capable of tool calling and handling artifacts. They serve as the primary interface for user interaction.

- Task Agents - Task agents are designed to perform specific tasks. They receive a task and its inputs and run until completion. An example is a "Deep Research" agent.

- Background Agents - These agents operate in the background, responding to external events like monitoring Linear, Slack etc.
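To make the "interface" distinction concrete, here is a minimal Python sketch of the three shapes. Every name in it (`ConversationalAgent`, `TaskAgent`, `BackgroundAgent`, and the toy implementations) is hypothetical and chosen for illustration. It is not the Auto-Agent code, just one way the three interfaces could be written down.

```python
from dataclasses import dataclass, field
from typing import Protocol


class ConversationalAgent(Protocol):
    """Chat-style interface: one user message in, one reply out."""
    def chat(self, message: str) -> str: ...


class TaskAgent(Protocol):
    """Receives a task and its inputs, runs to completion, returns a result."""
    def run(self, task: str, inputs: dict) -> dict: ...


class BackgroundAgent(Protocol):
    """Reacts to external events; nobody is waiting on a return value."""
    def on_event(self, source: str, event: dict) -> None: ...


# Toy implementations (assumptions, no LLM involved) to show the shapes in use.

@dataclass
class EchoChat:
    history: list = field(default_factory=list)

    def chat(self, message: str) -> str:
        self.history.append(message)
        return f"you said: {message}"


class WordCountTask:
    def run(self, task: str, inputs: dict) -> dict:
        return {"task": task, "words": len(inputs["text"].split())}


@dataclass
class EventLogger:
    seen: list = field(default_factory=list)

    def on_event(self, source: str, event: dict) -> None:
        self.seen.append((source, event))
```

The point of separating them this way is that a frontend only needs to know which of the three shapes it is talking to, not how the agent behind it works.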

Glue

Glue is the connecting layer between the agents and the frontends. Everything in this layer is based on the Actor model, which is a natural fit for agent architectures. Compared with using functions as the basic primitive, the Actor model has a few key advantages:

- Network Transparency: Agents may run in the same process as the frontend, in their own process, in a Docker container (for sandboxing), or in the cloud.

- Temporal Flexibility: Agents can be short-lived or long-lived. Messages may be sent in real time, or an agent may need to wait days for a response.

In addition, we can map the Actor model to the key technologies that will be used in this layer, including Model Context Protocol, WebSockets, and the Cloudflare Agents platform (particularly Durable Objects).
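For readers new to the Actor model, here is a minimal in-process sketch using Python's `asyncio`. The names `Actor`, `LocalRef`, `Counter`, and `STOP` are hypothetical and are not part of Auto-Agent or of any of the platforms mentioned above. The idea it illustrates: callers only hold a reference with `send` and `ask`, so a remote reference (say, one that serialises messages over a socket) could expose the same two methods without the caller changing.

```python
import asyncio
from dataclasses import dataclass
from typing import Any, Optional

STOP = object()  # sentinel message that tells an actor to shut down


@dataclass
class Message:
    body: Any
    reply_to: Optional[asyncio.Future] = None


class Actor:
    """Owns private state and a mailbox; handles one message at a time."""

    def __init__(self) -> None:
        self.inbox: asyncio.Queue = asyncio.Queue()

    async def receive(self, body: Any) -> Any:
        raise NotImplementedError

    async def run(self) -> None:
        while True:
            msg = await self.inbox.get()
            if msg.body is STOP:
                break
            result = await self.receive(msg.body)
            if msg.reply_to is not None:
                msg.reply_to.set_result(result)


class LocalRef:
    """Address of an actor living in the same process."""

    def __init__(self, actor: Actor) -> None:
        self._actor = actor

    async def send(self, body: Any) -> None:
        # Fire-and-forget: the sender does not wait for processing.
        await self._actor.inbox.put(Message(body))

    async def ask(self, body: Any) -> Any:
        # Request/response: wait until the actor replies.
        fut = asyncio.get_running_loop().create_future()
        await self._actor.inbox.put(Message(body, fut))
        return await fut


class Counter(Actor):
    def __init__(self) -> None:
        super().__init__()
        self.count = 0

    async def receive(self, body: Any) -> int:
        if body == "increment":
            self.count += 1
        return self.count


async def demo() -> int:
    counter = Counter()
    worker = asyncio.create_task(counter.run())
    ref = LocalRef(counter)
    await ref.send("increment")
    await ref.send("increment")
    total = await ref.ask("get")
    await ref.send(STOP)
    await worker
    return total


if __name__ == "__main__":
    print(asyncio.run(demo()))
```

Because the mailbox is a queue, messages are handled in order and the actor's state is never touched concurrently. The same mailbox could be persisted rather than held in memory, which is what would let an agent wait days for a reply.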

Current Progress and What's Next

I've built the first version of a TUI chat application over a few days of vibe-coding (then a few weeks of refactoring). This will be my main interface. I'll share an LLM-friendly guide to building your own TUI chat application in the next part of this series, then move on to building more useful agents.

Note on vibe-coding

I love this note from Steve Yegge's excellent article - Revenge of the junior developer:

Brief note about the meaning of "vibe coding": In this post, I assume that vibe coding will grow up and people will use it for real engineering, with the "turn your brain off" version of it sticking around just for prototyping and fun projects. For me, vibe coding just means letting the AI do the work. How closely you choose to pay attention to the AI's work depends solely on the problem at hand. For production, you pay attention; for prototypes, you chill. Either way, it’s vibe coding if you didn’t write it by hand.